Shareable Simple Data Transformations

Data Pipes is an online service for doing simple data transformations on tabular data – deleting rows and columns, find and replace, filtering, viewing as HTML.

Even better, you can connect these transformations together, Unix pipes style, to make more complex transformations (for example, first delete a column, then do a find and replace).

You can do all of this in your browser without having to install anything. To share your data and pipeline, all you need to do is copy and paste a URL.

Quick start

- View a CSV – turn a CSV into a nice online HTML table in seconds

- Pipeline Wizard – create your own Data Pipeline interactively

- Find out more – including full docs of the API

Example

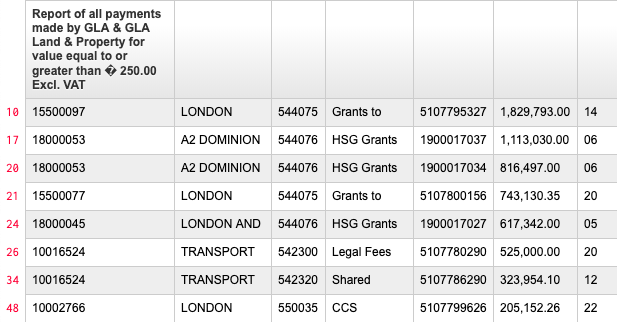

To illustrate, here's an example which shows the power of Data Pipes. It shows Data Pipes being used to clean up and display a raw spending data CSV file from the Greater London Authority.

This does the following:

- parses the incoming URL as CSV

- slices out the first 50 rows (using head)

- deletes the first column (using cut)

- deletes rows 1-5 (using delete)

- then selects those rows with London (case-insensitive) in them (using grep)

- finally transforms the output to an HTML table (using html)
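
To make the steps above concrete, here is a rough local emulation in Python. It is only a sketch of what each step does to the rows, not how the service implements them. The sample data is made up, and the reading of "rows 1-5" as 0-based and inclusive is an assumption.

```python
import csv
import html
import io
import re

# Made-up sample rows standing in for the spending file (not the real data).
RAW = "\n".join([
    "id,supplier,amount",
    "1,Acme Ltd,10",
    "2,London Buses,20",
    "3,Widgets Inc,30",
    "4,Acme Ltd,40",
    "5,Widgets Inc,50",
    "6,Transport for LONDON,60",
    "7,Acme Ltd,70",
    "8,city of london corp,80",
])

rows = list(csv.reader(io.StringIO(RAW)))  # parse the input as CSV
rows = rows[:50]                           # head: keep the first 50 rows
rows = [row[1:] for row in rows]           # cut: drop the first column
del rows[1:6]                              # delete: drop rows 1-5 (assumed 0-based, inclusive)

# grep: keep rows where any cell matches "london", ignoring case
pattern = re.compile("london", re.IGNORECASE)
rows = [row for row in rows if any(pattern.search(cell) for cell in row)]

# html: render the remaining rows as a bare HTML table
table = "<table>\n" + "\n".join(
    "<tr>" + "".join(f"<td>{html.escape(cell)}</td>" for cell in row) + "</tr>"
    for row in rows
) + "\n</table>"
print(table)
```

Note that in this emulation the header row is filtered out by the grep step like any other row that does not mention London.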

Here's what the output looks like:

API

The basic API is of the form:

    /csv/{transform} {args}/?url={source-url}

For example, here is a head operation which shows the first n rows of a file (the default case with no arguments shows the first 10 lines):

    /csv/head/?url={source-url}

With arguments (showing first 20 rows):

    /csv/head -n 20/?url={source-url}

Piping

You can also do piping, that is, pass the output of one transformation as the input to another:

    /csv/{trans1} {args}/{trans2} {args}/.../?url={source-url}

Input Formats

At present we only support CSV but we are considering support for JSON, plain text and RSS.

If you are interested in JSON support then vote here.

Query string substitution

Some characters can’t be used in a URL path because of restrictions. If this is a limitation (for instance if you need to use backslashes in your grep regex), variables can be defined in the query string and substituted in. E.g.:

    /csv/grep $dt/html/?dt=\d{2}-\d{2}-\d{4}&url={source-url}

CORS and JS web apps

CORS is supported so you can use this from pure JS web apps.
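
To tie the API notes together, here is a minimal Python sketch of a helper that assembles a pipeline URL from a list of steps, a source URL and optional query string variables. The `pipeline_url` name and the `BASE` address are hypothetical and not part of Data Pipes. The sketch also assumes the service accepts standard percent-encoding of spaces and special characters, which is what a browser would send.

```python
from urllib.parse import quote, urlencode

# Hypothetical base URL; substitute the address of the Data Pipes instance you use.
BASE = "https://datapipes.example.org"


def pipeline_url(steps, source_url, variables=None, base=BASE):
    """Build /csv/{trans1} {args}/{trans2} {args}/.../?url={source-url}."""
    # Percent-encode each step; "$" stays literal so $name placeholders survive.
    path = "/".join(quote(step, safe="$") for step in steps)
    # Substitution variables go first, then the source URL, as in the example above.
    params = dict(variables or {})
    params["url"] = source_url
    return f"{base}/csv/{path}/?{urlencode(params)}"


# A single transform with arguments.
print(pipeline_url(["head -n 20"], "https://example.com/data.csv"))

# Two piped transforms, with the regex passed through query string substitution.
print(pipeline_url(
    ["grep $dt", "html"],
    "https://example.com/data.csv",
    variables={"dt": r"\d{2}-\d{2}-\d{4}"},
))
```

Keeping the regex in a variable means the backslashes and braces are encoded in the query string rather than placed in the URL path.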

Transform Operations

The basic operations are inspired by unix-style commands such as head, cut, grep and sed, but really anything a map function can do could be supported. (Suggest new operations here).

- none (aka raw) = no transform but file parsed (useful with CORS)

- csv = parse / render csv

- head = take only first X rows

- tail = take only last X rows

- delete = delete rows

- strip = delete all blank rows

- grep = filter rows based on pattern matching

- cut = select / delete columns

- replace = find and replace (not yet implemented)

- html = render as viewable HTML table
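
The operations listed above compose because each one takes rows in and gives rows out. The following Python sketch illustrates that idea with three of them. It is not the service's actual code: the `OPERATIONS` registry and `run` function are hypothetical, and the `-n` flag and default of 10 for tail are assumed by analogy with head.

```python
import shlex


def head(rows, n=10):
    # Keep only the first n rows.
    return rows[:n]


def tail(rows, n=10):
    # Keep only the last n rows.
    return rows[-n:] if n > 0 else []


def strip(rows):
    # Drop rows whose cells are all empty or whitespace.
    return [row for row in rows if any(cell.strip() for cell in row)]


OPERATIONS = {"head": head, "tail": tail, "strip": strip}


def run(spec, rows):
    """Apply a spec such as "strip/tail -n 2" to a list of rows."""
    for step in spec.split("/"):
        if not step.strip():
            continue  # ignore empty segments, e.g. from a trailing slash
        name, *args = shlex.split(step)
        kwargs = {}
        if args[:1] == ["-n"]:
            kwargs["n"] = int(args[1])
        rows = OPERATIONS[name](rows, **kwargs)
    return rows


sample = [["a", "1"], ["", ""], ["b", "2"], ["c", "3"]]
print(run("strip/tail -n 2", sample))
```

Adding a new operation in this sketch means writing one more function and registering it under a name.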

Contributing

Under the hood, Data Pipes is a simple open-source Node.js webapp living here on GitHub.

It's super easy to contribute and here are some of the current issues.